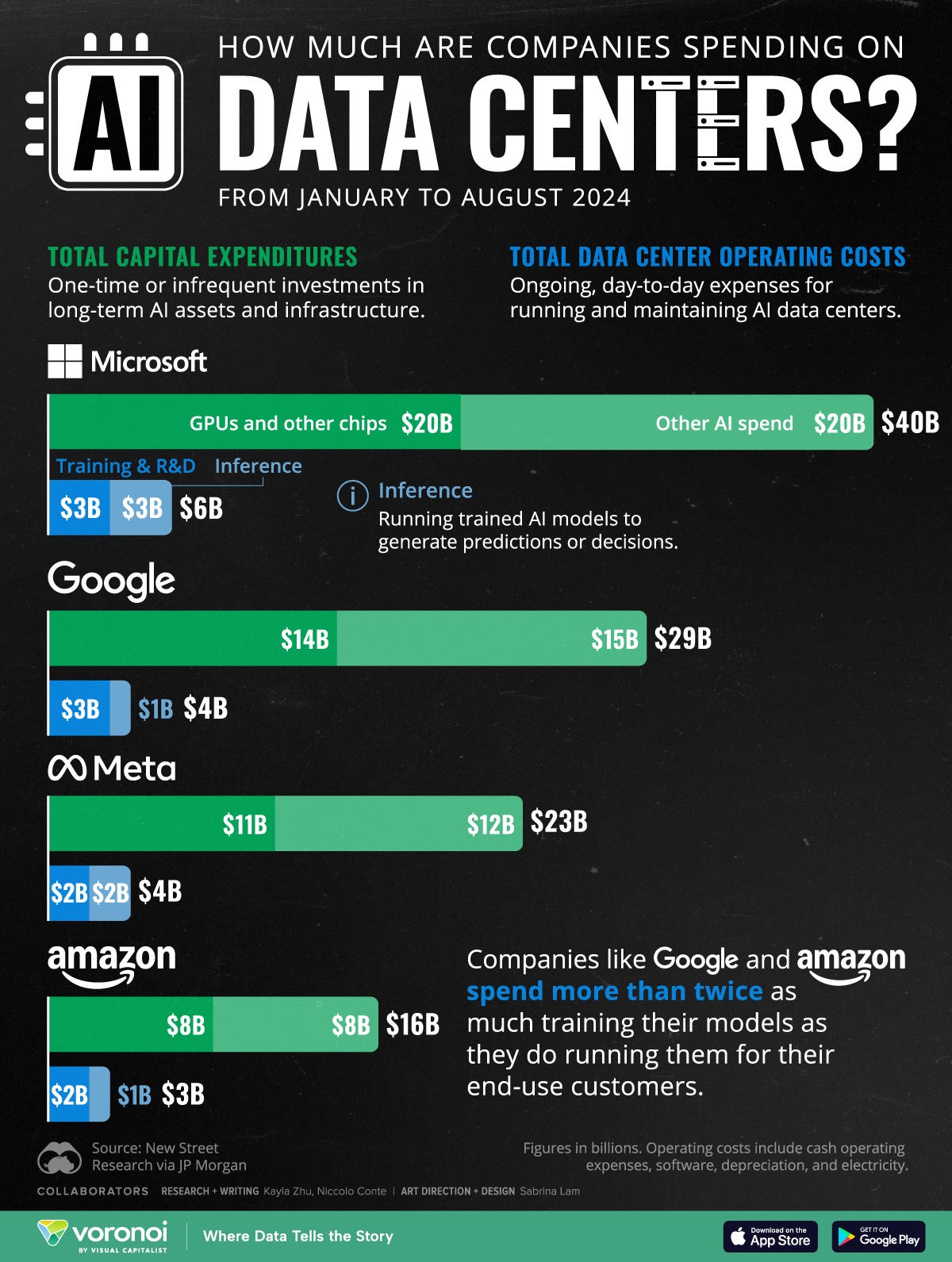

Visualizing Big Tech Company Spending On AI Data Centers

This was originally posted on our Voronoi app. Download the app for free on iOS or Android and discover incredible data-driven charts from a variety of trusted sources.

By Kayla Zh

Big Tech’s AI Data Center Costs

Big tech companies are aggressively investing billions of dollars in AI data centers to meet the escalating demand for computational power and infrastructure necessary for advanced AI workloads.

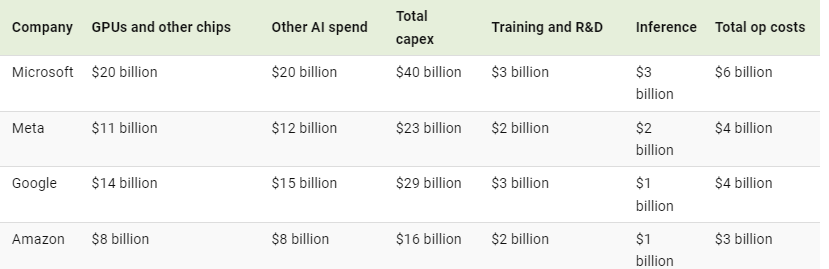

This graphic visualizes the total AI capital expenditures and data center operating costs for Microsoft, Google, Meta, and Amazon from January to August 2024.

AI capital expenditures are one-time or infrequent investments in long-term AI assets and infrastructure.

Data center operating costs are ongoing expenses for running and maintaining AI data centers on a day-to-day basis.

The data comes from New Street Research via JP Morgan and is updated as of August 2024. Figures are in billions. Operating costs include cash operating expenses, software, depreciation, and electricity.

Training AI Models Is Eating Up Costs

Below, we show the total AI capital expenditures and data center operating costs for Microsoft, Google, Meta, and Amazon.

Microsoft currently leads the pack in total AI data center costs, spending a total of $46 billion on capital expenditures and operating costs as of August 2024.

Microsoft also currently has the highest number of data centers at 300, followed by Amazon at about 215. However, variations in size and capacity mean the number of facilities doesn’t always reflect total computing power.

In September, Microsoft and BlackRock unveiled a $100 billion plan through the Global Artificial Intelligence Infrastructure Investment Partnership (GAIIP) to develop AI-focused data centers and the energy infrastructure to support them.

Notably, both Google and Amazon currently spend more than twice as much training their models as they do running them for their end-use customers (inference).

Training a major AI model is becoming increasingly expensive, as it requires large data sets, complex calculations, and substantial computational resources, often involving powerful GPUs and significant energy consumption.

However, as the frequency and scale of AI model deployments continue to grow, the cumulative cost of inference is likely to surpass these initial training costs, which is already the case for OpenAI’s ChatGPT.
