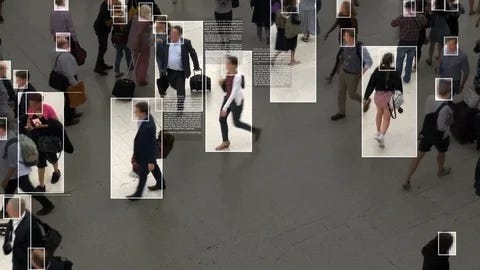

How to Cloak Your Photos from AI Facial Recognition Databases

China’s use of AI is a bit scary, in part because some of the most significant advances in facial recognition technology have been fueled by cutting-edge AI research in China. Besides being one of the world’s leaders in AI development and spending, China currently has the largest and most sophisticated facial recognition network on Earth.

The devil’s advocate argument for this technology in both the US and China is that it is used to stop crime, terrorism and human trafficking. In theory that sounds great, but in practice these measures usually translate into weaponized surveillance tools, used to stamp out dissent and to brand whomever the global power elite deems necessary as enemies of the state.

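In other words, it’s a classic example of the Panopticon as an instrument of surveillance power. Nor is this limited to China: in the UK, AI cameras at train stations have been trialled to analyze passengers’ emotions. We must ask ourselves: why does the UK government want to know and track the emotions of its own citizens? What practical or beneficial use could this possibly have?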

We also know that China has used this behavioral engineering technology to profile Uyghur Muslims and target them for detention. There are also pettier but still disturbing uses of facial recognition software, such as making sure that Chinese citizens do not take more toilet paper than they need in a public restroom.

China’s authoritarian use of this AI technology has been well documented. We know that 54% of the world’s CCTV cameras are located in China, and that there is one camera for every two people. If you have a low social credit score in China, you can be denied basic individual freedoms, including the ability to travel:

“…your online presence, general behaviour (habits, finances, purchases, hobbies, opinions etc) with facial recognition, body scanning and geo-tracking information to create a full picture of who an individual is and where they are. From this picture is formed a social credit score…once a person has a score, all their credit behaviour in life is recorded and can be evaluated based on that number. Our goal is to ensure that if people keep their promises they can go anywhere in the world. If people break their promises, they won’t be able to move an inch.”

High social credit scores, on the other hand, reward citizens who are dutiful to the CCP. A high score brings priority healthcare treatment, the ability to pursue higher education, and priority access to the best jobs and property in China.

But the question remains: how will future AI facial recognition technology be implemented in the US? Ideologues and politicians claim that the US currently uses this software mostly for benign purposes: selling products, advertising, and national security.

This is supposed to comfort us. When our private data is bought and sold by shady data brokers in the US, we are meant to believe there is nothing to worry about, even as these anti-freedom technologies are steadily being normalized around the world.

The Black Mirror of Clearview AI

Law enforcement's use of Clearview AI's facial recognition technology has surged, with searches doubling to 2 million in the past year. CEO Hoan Ton-That revealed to Biometric Update that the company's database now contains 50 billion facial images.

“Clearview scraped the public internet from billions of photos, using everything from Venmo transactions to Flickr posts. With that data, it built a comprehensive database of faces and made it searchable. Clearview sees itself as the Google of facial recognition, reorganizing the internet by face searches and its primary customers have become police departments and now the Department of Homeland Security.”

Clearview AI continues to expand its global reach, launching a trial in Ecuador and establishing a Latin American sales team. In the U.S., the company has gained access to the Tradewinds Solutions Marketplace, allowing it to offer its services to defense sector clients. Meanwhile, the mainstream press increasingly treats advances in facial recognition AI as a sign of the “end of privacy”.

Here’s a quick recap of how Clearview AI works: law enforcement and government officials only need to submit a single photo to Clearview’s app, and it will quickly find every matching photo of you that it has scraped from the internet.
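Clearview has not published its internals, so the following is only a minimal sketch of how face search systems generally work, assuming each face is reduced to a numeric “embedding” vector and a search returns stored photos whose embeddings point in nearly the same direction as the submitted one. The photo names, the 4-number vectors and the 0.95 threshold are all made up for illustration; real systems compute much longer vectors with a neural network.

```python
import math

# Hypothetical database: made-up 4-D "face embeddings" keyed by invented photo names.
# Real systems derive far longer vectors from each face with a neural network.
DATABASE = {
    "flickr_post.jpg": [0.90, 0.10, 0.30, 0.20],
    "venmo_profile.jpg": [0.88, 0.12, 0.28, 0.22],
    "unrelated_person.jpg": [0.10, 0.90, 0.20, 0.70],
}

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def search_by_face(probe, database, threshold=0.95):
    """Return (photo, score) pairs at or above the (hypothetical) threshold, best first."""
    scored = [(photo, cosine_similarity(probe, emb)) for photo, emb in database.items()]
    hits = [(photo, score) for photo, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)

# Embedding of the single submitted photo (also invented).
probe = [0.91, 0.09, 0.31, 0.19]
for photo, score in search_by_face(probe, DATABASE):
    print(f"{photo}: {score:.3f}")
```

Here both photos of the same (imaginary) person are returned and the unrelated face is not. This is why one photo is enough: once every scraped image has an embedding, a search is just a similarity comparison against the whole database.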

Combining facial recognition AI with rapidly improving deepfake technology could very easily produce the kind of nightmare scenario explored by both Black Mirror and the underrated TV show The Capture.

The latter, a UK show, depicts a disturbing futuristic society in which facial recognition AI is combined with perfectly undetectable deepfake technology to fabricate surveillance footage, placing people in scenarios and actions that never actually happened. Intelligence services and the government then use this footage to frame and exploit their enemies for their own hidden agendas.

Solutions: How to Cloak Your Social Media Images

If US citizens can’t count on corrupt politicians to pass laws that effectively curb the abuse of power so clearly at the fingertips of megacorps and government, then it might be time to protect yourself and your loved ones with some simple actions.

Generally, if you’re looking to evade facial recognition software, it’s important to note that neural networks tend to place a lot of emphasis on the bridge of the nose. Unfortunately, in most cases, wearing glasses won’t be enough to evade the latest cutting-edge AI.

If you’re wary of showing up at the airport to be interrogated by the TSA in full-blown clown makeup (a tactic that can be effective in evading facial recognition), we don’t blame you. Carefully applied black paint on the face tends to disrupt the light-and-dark contrast of the skin and throw off the facial symmetry that facial recognition AI scans for.

https://invidious.reallyaweso.me/watch?v=tbdcL5Ux-9Y&list=PLW5y1tjAOzI0ZkKQzbc4HLaKLWDm_2VwQ
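Older face detectors in the Viola-Jones tradition make the light-and-dark idea concrete: they score rectangular “Haar-like” features, such as a bright nose bridge sitting between two darker eye regions. The sketch below assumes a made-up 2x6 grayscale patch and a hypothetical threshold of 50; modern neural-network systems learn their own features, so treat it only as an intuition for why dark paint across the nose bridge can confuse a detector.

```python
def integral_image(img):
    """Summed-area table with a zero border: ii[r][c] is the sum of img[:r][:c]."""
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            ii[r + 1][c + 1] = img[r][c] + ii[r][c + 1] + ii[r + 1][c] - ii[r][c]
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of any rectangle in constant time from four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def bridge_feature(img):
    """Three-rectangle feature: mean brightness of the middle strip (nose bridge)
    minus the mean brightness of the two outer strips (eyes)."""
    ii = integral_image(img)
    height, third = len(img), len(img[0]) // 3
    area = height * third
    left = rect_sum(ii, 0, 0, height, third)
    middle = rect_sum(ii, 0, third, height, third)
    right = rect_sum(ii, 0, 2 * third, height, third)
    return middle / area - (left + right) / (2 * area)

def looks_like_face(img, threshold=50.0):
    # Hypothetical threshold; a real cascade combines thousands of such features.
    return bridge_feature(img) > threshold

# Made-up 2x6 grayscale patches (0 = black, 255 = white): dark eyes, bright bridge.
FACE = [
    [40, 40, 200, 200, 40, 40],
    [50, 50, 210, 210, 50, 50],
]
# The same patch with black paint across the nose bridge.
PAINTED = [
    [40, 40, 10, 10, 40, 40],
    [50, 50, 10, 10, 50, 50],
]

print(bridge_feature(FACE), looks_like_face(FACE))        # 160.0 True
print(bridge_feature(PAINTED), looks_like_face(PAINTED))  # -35.0 False
```

Painting the bridge flips the sign of the feature, so this one test no longer fires. The integral image is the design choice that made such detectors fast: every rectangle sum costs four lookups regardless of its size.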

Long hairstyles with bangs can also cleverly mask the bridge of the nose. Infrared LEDs mounted on the bridge of a pair of glasses might also work, but these methods may also mark you as a threat in the eyes of the TSA, so we in no way recommend using these techniques.

If clown makeup and infrared lights won’t work, what will? There are some advanced Python scripts that work in theory, but in practice they tend to be a dead end.

A more practical solution for evading facial recognition AI arrived in August 2020, when the University of Chicago’s SAND Lab released an open source project called Fawkes. Fawkes applies a kind of invisible mask to sensitive photos, including ones you may already have shared publicly on social media, where they can be scraped into facial recognition databases or used by big tech to train their fancy new image-generation AIs on your private family photos.

You can protect your private images with Fawkes by selecting the images you want to cloak from facial recognition and running them through the software. Rather than adding random noise, Fawkes makes small, pixel-level changes (which its authors call “cloaks”) that are designed to be barely visible to the human eye while misleading facial recognition models. After this process, you can re-upload your protected images to social media or other websites with much less worry that unauthorized facial recognition models will be able to identify you.
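Conceptually, cloaking is an optimization problem: find a change to the pixels, capped at a small budget, that pulls the photo’s facial features toward those of a different identity, so a model trained on cloaked photos learns the wrong face. The sketch below assumes a made-up linear “feature extractor” (the matrix `W`), four made-up pixel values, and a per-pixel budget `epsilon`, and uses projected gradient descent. Fawkes itself, as its authors describe it, uses a deep neural feature extractor and a perceptual (DSSIM-based) budget, so this illustrates the idea rather than reproducing the tool’s code.

```python
# Hypothetical linear "feature extractor" mapping a 4-pixel image to a 2-D
# feature vector. Real face recognizers use deep neural networks instead.
W = [
    [0.5, -0.2, 0.1, 0.4],
    [-0.3, 0.6, 0.2, -0.1],
]

def features(x):
    return [sum(w * p for w, p in zip(row, x)) for row in W]

def distance_sq(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def cloak(x, target_features, epsilon=0.05, step=0.01, iterations=200):
    """Projected gradient descent: move features(x + delta) toward target_features
    while keeping every pixel change within +/- epsilon and every pixel in [0, 1]."""
    delta = [0.0] * len(x)
    for _ in range(iterations):
        cloaked = [p + d for p, d in zip(x, delta)]
        residual = [f - t for f, t in zip(features(cloaked), target_features)]
        # Gradient of ||W(x + delta) - target||^2 with respect to delta: 2 * W^T residual.
        grad = [2 * sum(W[k][i] * residual[k] for k in range(len(W))) for i in range(len(x))]
        for i in range(len(x)):
            d = delta[i] - step * grad[i]
            d = max(-epsilon, min(epsilon, d))   # project onto the perturbation budget
            d = max(-x[i], min(1.0 - x[i], d))   # keep the cloaked pixel a valid intensity
            delta[i] = d
    return [p + d for p, d in zip(x, delta)]

# Made-up pixel intensities for "your" photo and for a different identity.
original = [0.6, 0.4, 0.5, 0.7]
other_identity = [0.2, 0.8, 0.3, 0.1]
target = features(other_identity)

cloaked = cloak(original, target)
print("max pixel change:", max(abs(c - o) for c, o in zip(cloaked, original)))
print("distance to target before:", distance_sq(features(original), target))
print("distance to target after: ", distance_sq(features(cloaked), target))
```

No pixel moves by more than the budget, yet the photo’s features land measurably closer to the other identity. The tradeoff lives in `epsilon`: a larger budget gives stronger protection but more visible changes.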

Unfortunately, this method is of limited use if your images have already been captured and compromised by big tech. Fawkes was last updated in 2022, and at that time it claimed to be effective against Microsoft Azure, so it may still be effective, though there is no guarantee.

Take Back Our Tech is organic content written by real humans and technologists; we do not use AI for content generation. We report on the latest news in information technology through the lens of individual privacy and freedom, and we aim to provide practical solutions in every piece of content. Subscribe as a paid member to our Substack to support us!

Further Reading

https://open.substack.com/pub/tbot/p/ai-hype-and-the-psychology-of-power?r=2088vv&utm_campaign=post&utm_medium=web&showWelcomeOnShare=true

https://www.theverge.com/22522486/clearview-ai-facial-recognition-avoid-escape-privacy

https://www.wired.com/story/amazon-ai-cameras-emotions-uk-train-passengers/

https://uxdesign.cc/the-curious-case-of-toilet-paper-and-facial-recognition-c204c701fd0f

https://www.theverge.com/2020/8/4/21353810/facial-recognition-block-ai-selfie-cloaking-fawkes

https://invidious.reallyaweso.me/watch?v=tbdcL5Ux-9Y&list=PLW5y1tjAOzI0ZkKQzbc4HLaKLWDm_2VwQ

https://github.com/Shawn-Shan/fawkes

https://www.businessinsider.com/meta-instagram-facebook-photos-used-in-ai-models-training-2024-5

Go paid at the $5 a month level, and we will send you both the PDF and e-Pub versions of “Government” - The Biggest Scam in History… Exposed! and a coupon code for 10% off anything in the Government-Scam.com/Store.

Go paid at the $50 a year level, and we will send you a free paperback edition of Etienne’s book “Government” - The Biggest Scam in History… Exposed! OR a 64GB Liberator flash drive if you live in the US. If you are international, we will give you a $10 credit towards shipping if you agree to pay the remainder.

Support us at the $250 Founding Member Level and get a signed high-resolution hardcover of “Government” + Liberator flash drive + Larken Rose’s The Most Dangerous Superstition + Art of Liberty Foundation Stickers delivered anywhere in the world. This is the only way to get a signed copy besides catching Etienne at an event.