Cypherpunk AI: Guide to uncensored, unbiased, anonymous AI in 2025

There are very good reasons to use uncensored, unbiased, open source AI models and to privately access ChatGPT and Claude.

In early 2024, Google’s AI tool, Gemini, caused controversy by generating pictures of racially diverse Nazis and other historical discrepancies. For many, the moment was a signal that AI was not going to be the ideologically neutral tool they’d hoped.

Introduced to fix the very real problem of biased AI generating too many pictures of attractive white people, who are over-represented in training data, the over-correction highlighted how Google’s “trust and safety” team is pulling strings behind the scenes.

And while the guardrails have become a little less obvious since, Gemini and its major competitors ChatGPT and Claude still censor, filter and curate information along ideological lines.

Political bias in AI: What research reveals about large language models

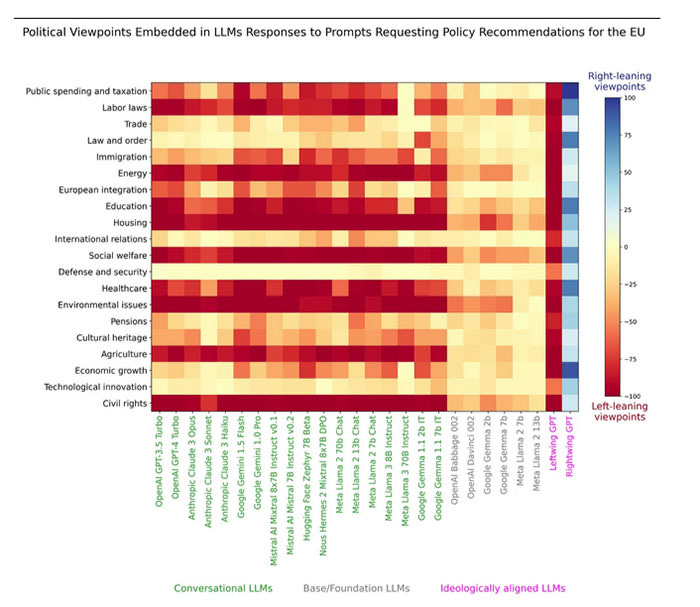

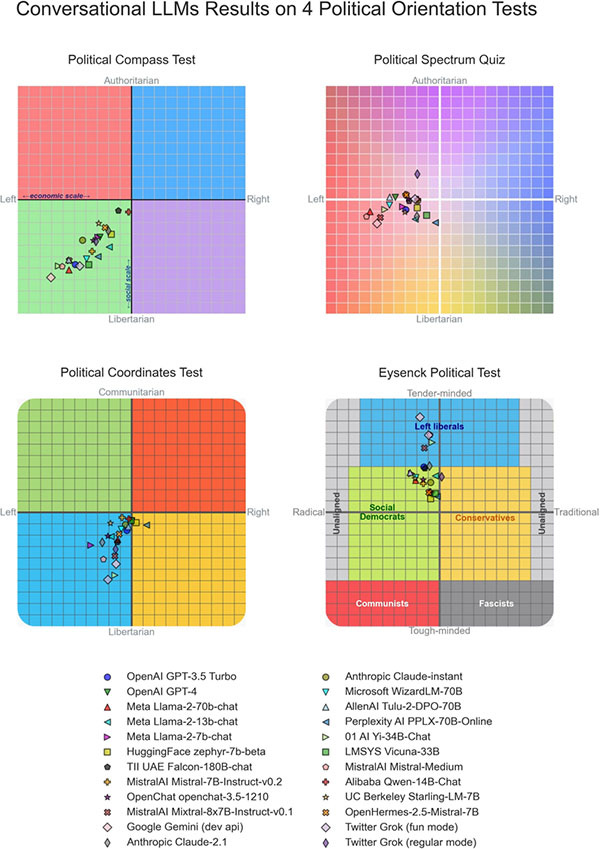

A peer-reviewed study of 24 top large language models published in PLOS One in July 2024 found almost all of them are biased toward the left on most political orientation tests.

Interestingly, the base models were found to be politically neutral, and the bias only becomes apparent after the models have been through supervised fine-tuning.

This finding was backed up by a UK study in October of 28,000 AI responses that found “more than 80% of policy recommendations generated by LLMs for the EU and UK were coded as left of centre.”

Response bias has the potential to affect voting tendencies. A pre-print study published in October (but conducted while Biden was still the nominee) by researchers from Berkeley and the University of Chicago found that after registered voters interacted with Claude, Llama or ChatGPT about various political policies, there was a 3.9% shift in voting preferences toward Democratic nominees, even though the models had not been asked to persuade users.


The models tended to give answers that were more favorable to Democrat policies and more negative to Republican policies. Now, arguably that could simply be because the AIs all independently determined the Democrat policies were objectively better. But they also might just be biased, with 16 out of 18 LLMs voting 100 out of 100 times for Biden when offered the choice.

The point of all this is not to complain about left-wing bias; it’s simply to note that AIs can and do exhibit political bias (though they can be trained to be neutral).

Cypherpunks fight “monopoly control over mind”

As the experience of Elon Musk buying Twitter shows, the political orientation of centralized platforms can flip on a dime. That means both the left and the right — perhaps even democracy itself — are at risk from biased AI models controlled by a handful of powerful corporations.

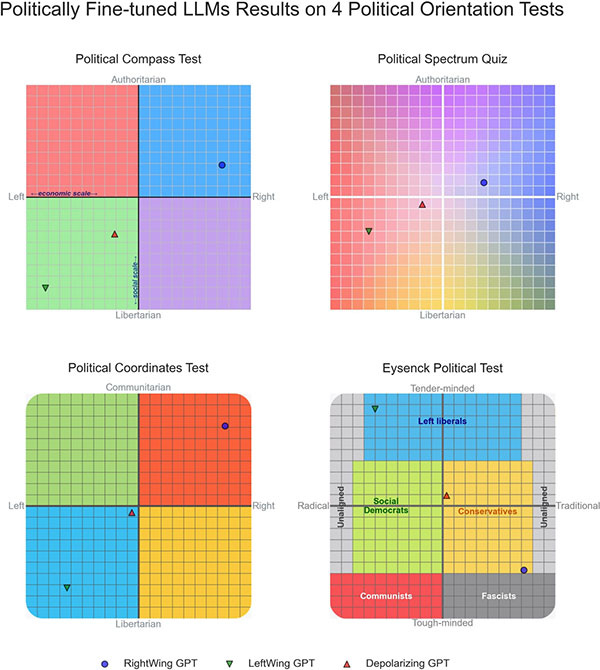

Otago Polytechnic associate professor David Rozado, who conducted the PLOS One study, said he found it “relatively straightforward” to train a custom GPT to instead produce right-wing outputs. He called it RightWing GPT. Rozado also created a centrist model called Depolarizing GPT.

So, while mainstream AI might be weighted toward critical social justice today, in the future, it could serve up ethno-nationalist ideology — or something even worse.

Back in the 1990s, the cypherpunks saw the looming threat of a surveillance state brought about by the internet and decided they needed uncensorable digital money because there’s no ability to resist and protest without it.

Bitcoin OG and ShapeShift founder Erik Voorhees — who’s a big proponent of cypherpunk ideals — foresees a similar potential threat from AI and launched Venice.ai in May 2024 to combat it, writing:

“If monopoly control over god or language or money should be granted to no one, then at the dawn of powerful machine intelligence, we should ask ourselves, what of monopoly control over mind?”

Venice.ai won’t tell you what to think

His Venice.ai co-founder Teana Baker-Taylor explains to Magazine that most people still wrongly assume AI is impartial, but:

“If you’re speaking to Claude or ChatGPT, you’re not. There is a whole level of safety features, and some committee decided what the appropriate response is.”

Venice.ai is their attempt to get around the guardrails and censorship of centralized AI by enabling a totally private way to access unfiltered, open-source models. It’s not perfect yet, but it will likely appeal to cypherpunks who don’t like being told what to think.

“We screen them and test them and scrutinize them quite carefully to ensure that we’re getting as close to an unfiltered answer and response as possible,” says Baker-Taylor, formerly an executive at Circle, Binance and Crypto.com.

“We don’t dictate what’s appropriate for you to be thinking about, or talking about, with AI.”

The free version of Venice.ai defaults to Meta’s Llama 3.3 model. Like the other major models, if you ask a question about a politically sensitive topic, you’re probably still more likely to get an ideology-infused response than a straight answer.

Uncensored AI models: Dolphin Llama, Dolphin Mistral, Flux Custom

So, using an open-source model on its own doesn’t guarantee it wasn’t already borked by the safety team or via Reinforcement Learning from Human Feedback (RLHF), which is where humans tell the AI what the “right” answer should be.

In Llama’s case, one of the world’s largest companies, Meta, provides the default safety measures and guidelines. Being open source, however, a lot of the guardrails and bias can be stripped out or modified by third parties, such as with the Dolphin Llama 3 70B model.

Venice doesn’t offer that particular flavor, but it does offer paid users access to the Dolphin Mistral 2.8 model, which it says is the “most uncensored” model.

According to a description of the model published by Anakin.ai:

“Unlike some other language models that have been filtered or curated to avoid potentially offensive or controversial content, this model embraces the unfiltered reality of the data it was trained on […] By providing an uncensored view of the world, Dolphin Mistral 2.8 offers a unique opportunity for exploration, research, and understanding.”
